Intrinsic Values

What do we really value? Are our values reducible to just one or two things, or are they more complex? What does it mean to value something? In this talk from EA Global 2018: San Francisco, Spencer Greenberg argues that we each have a set of multiple intrinsic values, and that it's worth it to reflect on them carefully. A transcript of Spencer's talk is below, including questions from the audience, which we have lightly edited for readability.

The Talk

I have three propositions for you. First, that you probably have multiple intrinsic values. Second, that most people don't know what all of their intrinsic values are. And third, that it's useful to know your intrinsic values. It's worth investing the time to try to figure them out. These are the three things I'm going to explore with you today.

What is an intrinsic value? It's something we value for itself, not merely for its consequences. Another way to think about it is that we would continue to value an intrinsic value even if it caused nothing else. So in some sense, our intrinsic values are the things that, according to our own value system, we want there to be more of in the world. Now I'd like this to be interactive to make it more interesting, so when you see this blue symbol I'm going to ask you a question. If your answer is yes, I'd like you to raise your hand in the air, and if your answer is no, I'd like you to put your hand on your shoulder.

My first question is, is money an intrinsic value? I'm seeing a lot of nos out there. So why do we care about money? Well, money lets us do things like buy a car, or buy food. Buying a car might give us autonomy; buying food might give us nourishment and pleasure. But imagine we were in an economy with hyperinflation where you literally couldn't use money for anything. At that point it's basically just paper, and we can't get any of the things we want out of it. I think in that case, most people would say it actually has no value. So I would say money is not an intrinsic value: once we screen off all of its effects, so that it no longer gets us anything, we no longer value it.

My second question is, what about one's own pleasurable experiences? Are they an intrinsic value for most people? Suppose that someone had a mind-blowingly enjoyable experience, and someone else said, "But I don't get it, why do you care about that? You didn't get any party favors, or any other effects from it." I think people would generally say, "Well what are you talking about? The value was the pleasurable experience, right? I value it intrinsically."

Now there are a lot of drivers for our behavior, like our habits, learning from rewards and punishments, social mimicry and biological responses. Intrinsic values are another one of these drivers for our behavior. I like to think of it with a metaphor of a lighthouse. Most of the time we're out at sea, and we're just focusing on the rowing that we're doing. We're trying to dodge the waves. But every once in a while we look out in the distance and we think: What the heck are we trying to get to? Why are we doing all this rowing? Where are we going? And that's kind of what our intrinsic values are like.

So we've defined intrinsic values so far in relation to the things we value. But we haven't really talked about what it means to value something. So let's talk about that a little. Well first, a question for you. In the video game Pacman, do ghosts have the value of catching Pacman?

A lot of uncertainty in this audience. Well, I think it depends on your perspective. One way to think about a value is as a model of an agent's behavior: insofar as the model is a good one, you could think of the agent as having that value. A second way to think about a value is as something that's part of an agent's utility function. Now imagine that Pacman had been implemented during the current AI boom, and its developers had used machine learning to develop the ghosts' "internal brains". In that case, the ghosts might literally have a utility function, with a machine learning algorithm trying to optimize closeness to Pacman. Then we could say that, in some sense, a ghost really does have the value of catching Pacman.
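To make the utility-function reading concrete, here is a toy sketch. It is not how any real version of Pacman works: it assumes an open grid with no walls, and the names `utility` and `choose_move` are hypothetical. The ghost's utility is hand-written as the negative Manhattan distance to Pacman, and the ghost simply picks whichever move maximizes it; a machine-learned ghost would arrive at its utility through training instead.

```python
# Toy sketch: a ghost that "values" catching Pacman in the utility-function sense.
# Assumptions (hypothetical): open grid, no walls, greedy one-step lookahead.

Position = tuple[int, int]

MOVES: dict[str, Position] = {
    "up": (0, -1),
    "down": (0, 1),
    "left": (-1, 0),
    "right": (1, 0),
}


def utility(ghost: Position, pacman: Position) -> int:
    """Higher is better for the ghost: the negative Manhattan distance to Pacman."""
    return -(abs(ghost[0] - pacman[0]) + abs(ghost[1] - pacman[1]))


def choose_move(ghost: Position, pacman: Position) -> str:
    """Pick the move whose resulting position has the highest utility."""
    def after(move: str) -> Position:
        dx, dy = MOVES[move]
        return (ghost[0] + dx, ghost[1] + dy)

    return max(MOVES, key=lambda move: utility(after(move), pacman))


if __name__ == "__main__":
    ghost, pacman = (0, 0), (3, 0)
    steps = 0
    while ghost != pacman:
        dx, dy = MOVES[choose_move(ghost, pacman)]
        ghost = (ghost[0] + dx, ghost[1] + dy)
        steps += 1
    print(steps)  # 3
```

In the sense Spencer prefers, nothing here "feels like" valuing anything; the code only shows what it means for a value to live inside a utility function.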

But for the purposes of this talk, my preferred perspective is a little bit different. It's that valuing is something our brains do, so there's something that it feels like to have a value. In that sense, the ghosts do not have values. Intrinsic values then become a subset of the things we value.

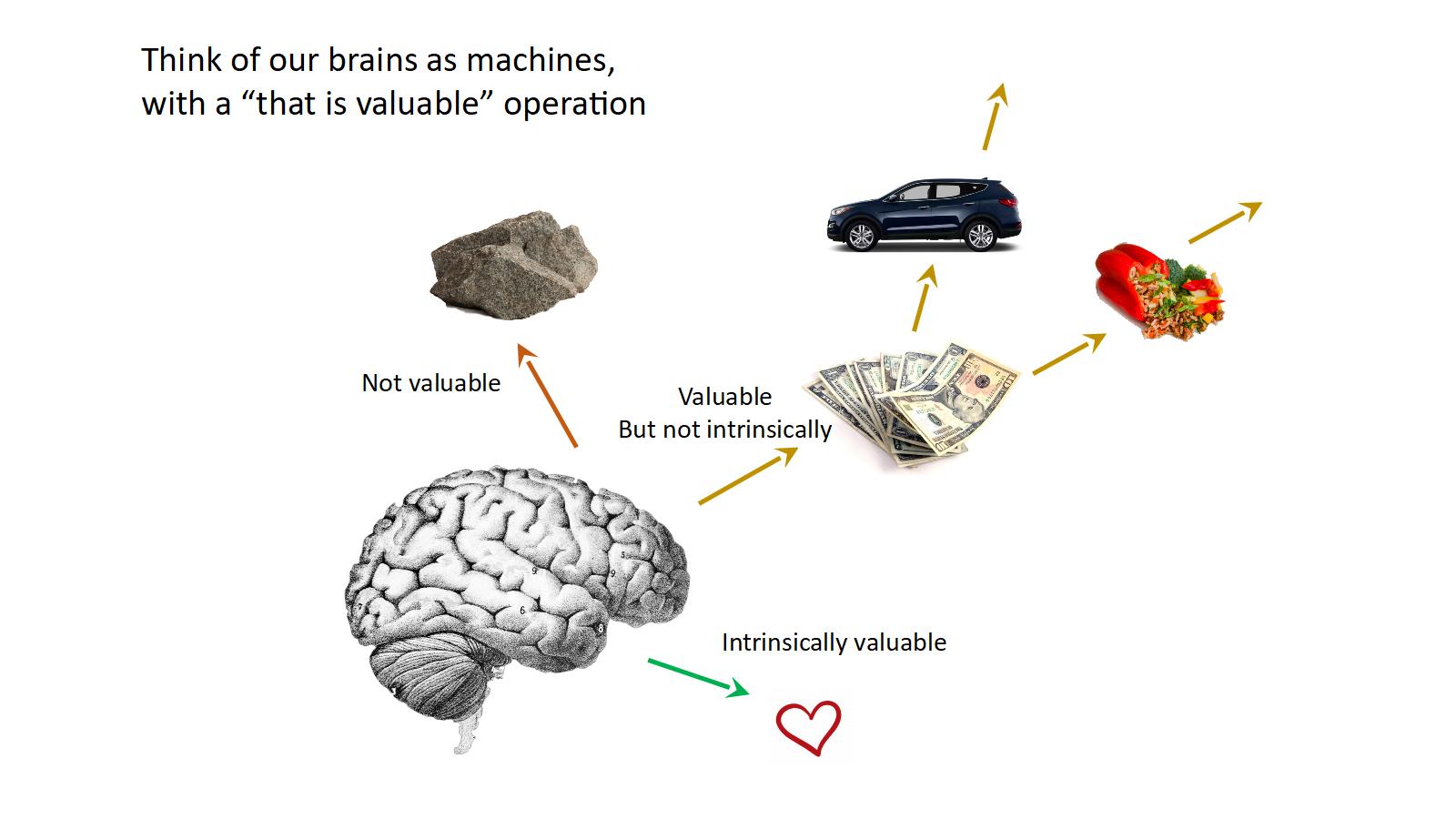

Let's think about this for a second as though our brains are machines, which in many ways they are. Our brains have an operation that assigns the label "valuable" to things. So you could look at a stone and say, "That is not valuable." You could look at money and say, "That's valuable." But wait: if I think about all the reasons I find money valuable, it turns out they all come down to its effects. So if I'm not allowed to get those effects, it's not valuable. Money is valuable, but it's not an intrinsic value. And then there might be other things that you look at and say: that's intrinsically valuable. Or maybe, when you reflect on them, you come to think they are intrinsically valuable.

So what's the point of all this? Why try to understand our intrinsic values? I'm going to give you five reasons why I think it's important to try to understand intrinsic values.

Five reasons to care about intrinsic values

The first is what I call avoiding value traps. A value trap is when there's something that you associate with an intrinsic value, or that might in the past have been related to one of your intrinsic values, so you seek after it. But in the long run, it doesn't end up giving you the thing that you actually intrinsically value.

So imagine someone who takes a high-paying career in finance, and the reason they take it is that when they're young, they associate lack of money with lack of autonomy, and they really value autonomy. So they take this career, but after working their ass off for 30 years, they suddenly realize they've actually given up their autonomy. Early on, the career was associated with the intrinsic value. But at some point that association broke, and the person kept going through inertia. So they fell into a value trap.

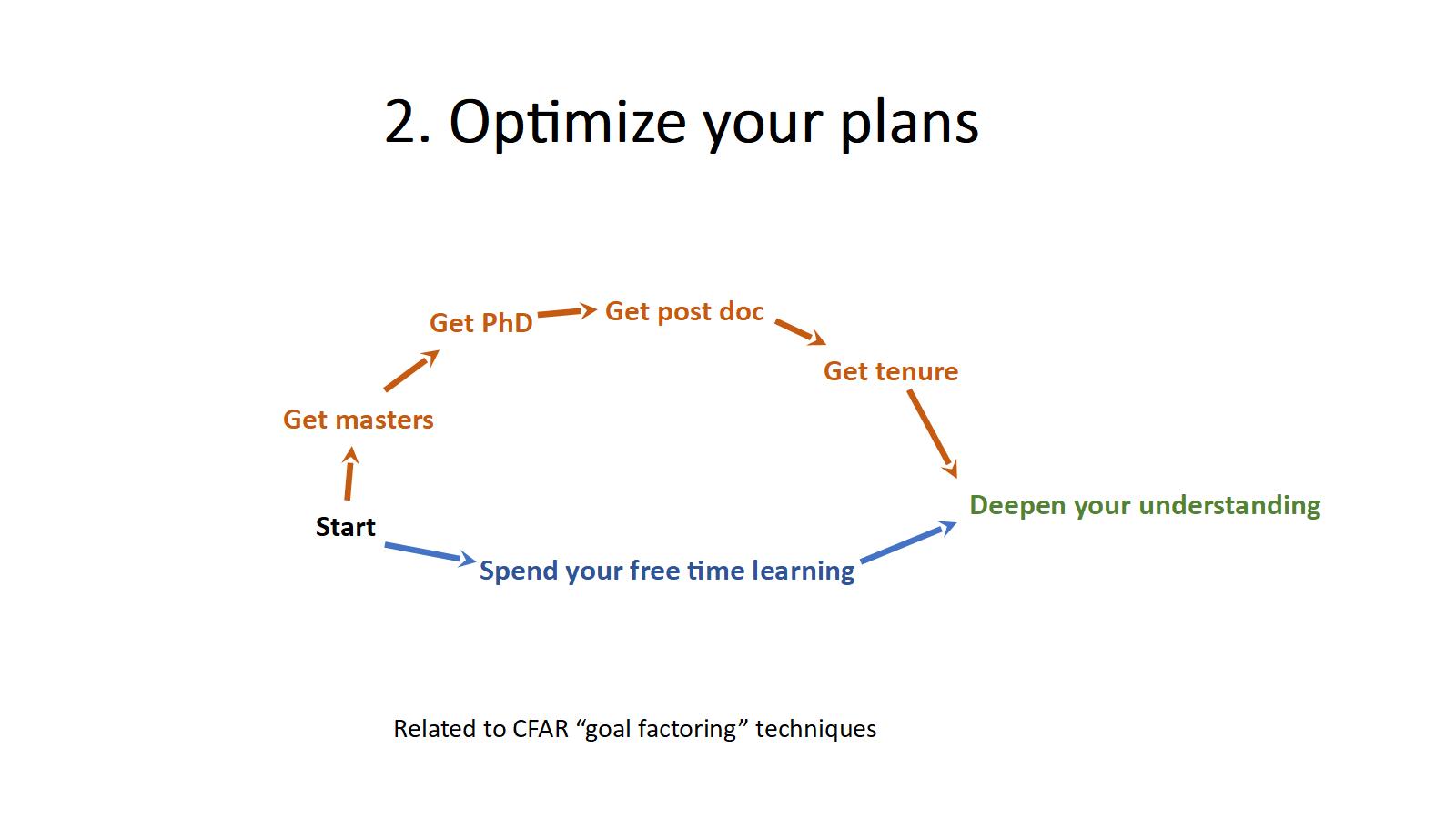

A second reason to care about your intrinsic values is to help you optimize your plans. For example, maybe we have a deep intrinsic value of deepening our understanding of the world, and we associate this with being a tenured professor. But maybe we don't realize that that's why we want to be a tenured professor, right? So we come up with this elaborate plan: I'm going to get my master's degree, then my PhD, then a postdoc, and eventually I'll become a tenured professor. But maybe there's a much more efficient route to what we actually want, like just spending our free time doing the in-depth learning we want to do. Maybe that would get us most of what we want with much less effort. If you've ever done a Center for Applied Rationality workshop, this is related to CFAR's goal factoring techniques.

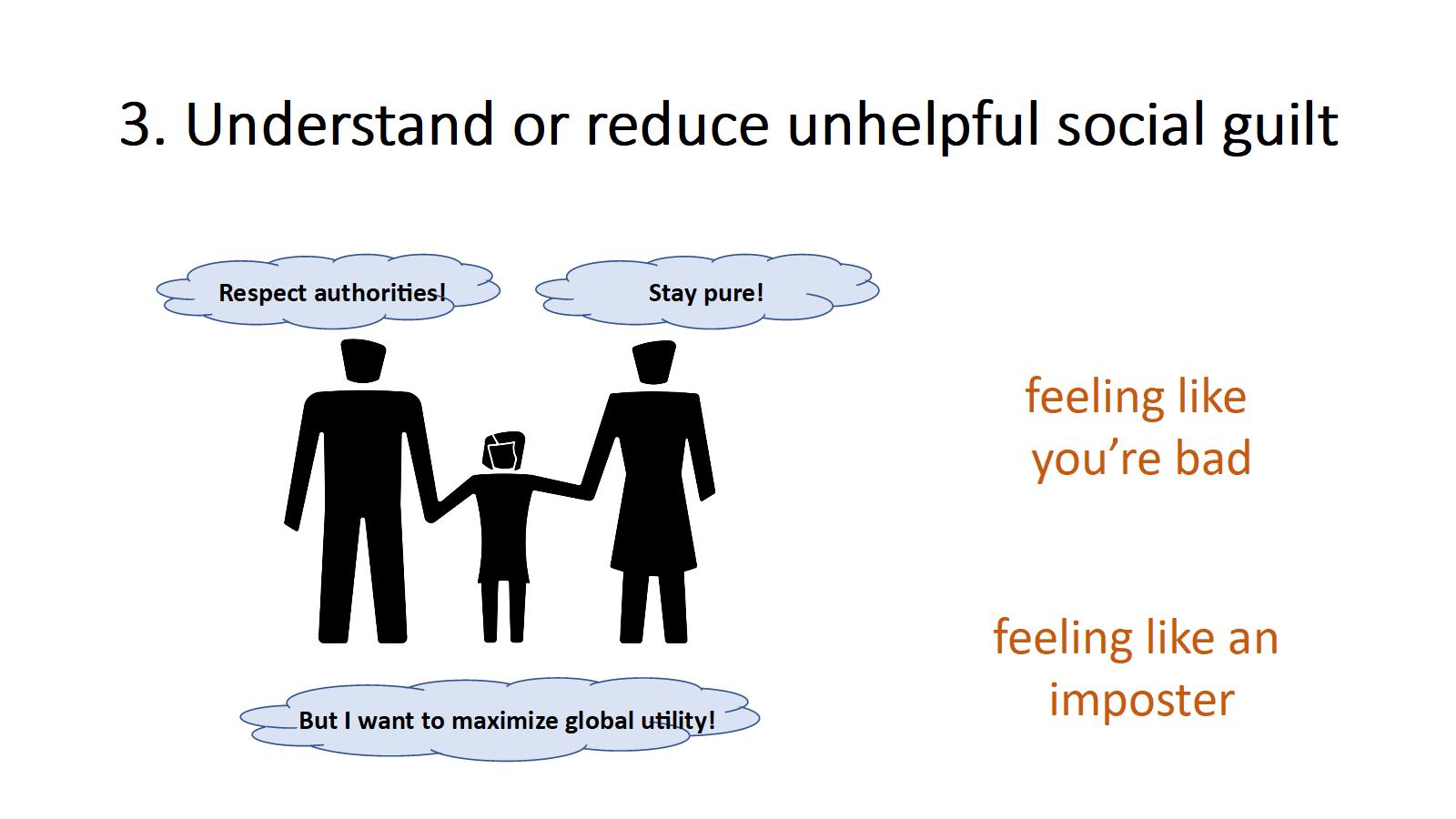

A third reason to try to understand your intrinsic values is that it can help you understand or reduce an unhelpful social guilt that people often have. As you grow up, people tell you what's valuable. Your parents do, your friends do. Even as an adult, the social circles around you are teaching you what's valuable. But what happens if your intrinsic values differ from what the people around you say is valuable? Well, I think it can lead to a lot of weird psychological effects.

You feel guilty, you feel like you're a bad person, you feel like you're an imposter. But you can take a step back and start reframing this in terms of intrinsic values and say, "Huh, my intrinsic values that I have may just be different than those of the people around me. My parents might have taught me to respect authority, maybe my friend group teaches me that I should be pure, according to some definition of pure, but maybe I just wanna maximize global utility", or something like this. So you end up in a situation where you have different intrinsic values. And once you realize that, you're like, "Okay, I'm not a bad person, I'm not an imposter, I just have different intrinsic values." And then you might actually be able to relate to your community more successfully.

A fourth reason that I think it could be important to understand your intrinsic values is to reduce a sort of irrational doublethink that I've noticed people sometimes have. I want to read a couple of statements to you:

- "The reason I cultivate friendship is because it helps me improve the world."

- "The reason I do fun things is so I don't burn out."

Now I don't know about you, but I don't buy these things. Sure, hypothetically doing fun things might prevent you from burning out, but I don't think that's almost ever the real reason people do fun things, right? But I do sometimes hear people say things like that. So what's going on?

Here's my theory: people have multiple intrinsic values, but in their social world, there might be only one or two that are socially acceptable. Or maybe they've studied philosophy and convinced themselves that there's only one true universal value that's important. So they take their own intrinsic values and try to shove them onto these acceptable values, and you get really weird statements like these. I don't think people are intending to be manipulative or anything like that; I think it's a self-deception, and I think it's potentially bad.

So I'm going to make a point that I think is very important: what you think you should value, or what you believe is the objective universal truth about value, is not the same as the entirety of what your intrinsic values actually are. When I'm referring to intrinsic values, I'm talking about an empirical fact about yourself, about your brain. And the moment you decide that something is a universal value, the one true value, it's not like your brain immediately flips into thinking that's the only thing that's valuable. Right? So those are different things. You might aspire to have your values be a certain way, but that doesn't mean they are that way. And you might try to shape them to make them more that way, but that's a long process, and you'll probably never fully get there anyway.

The fifth and final reason that I think it's useful to try to understand our intrinsic values is because it can help us figure out, more specifically, what kind of world we want to create. If we're thinking about improving the world on the margin, there are a lot of things we could do that are very obvious, that we could all agree on. We want to reduce disease, we want to make people suffer less, and so on. But when you start thinking about what world you actually want to create, it's insanely hard. If you attempt to do this, and you have one or two primary values you design this world around, I think you'll notice that you get in this really weird situation where you've designed this optimal world and it doesn't feel optimal. And you're like: That's actually a scary world. And you show it to other people and they're even more freaked out by it than you are.

Why is this? Well, I suspect it's because we have multiple intrinsic values. So if we focus on one or two of them and try to build an optimal world around those, it ends up being really weird and doesn't feel like that great a world. This becomes even more important if we're trying to create a world that other people want to live in too; we have to take into account a broad spectrum of intrinsic values and work with them to figure out what we want the world to look like. I think it would be really exciting for effective altruism to have more of a positive picture of the kind of world we want to create, not just a negative picture of the world we're trying to repair. I think that would be an exciting project.

How can we learn our intrinsic values?

All right, so all this comes with a huge caveat, which is that it's very tricky to figure out what your intrinsic values actually are. We have to check really carefully to make sure we've really found them.

So for example, let's say you're trying to decide if you value a huge pile of gold coins. And in doing the thought experiment to see whether it's a value or an intrinsic value, you've properly screened off the fact that you're not allowed to use it to buy a pirate ship, and you're not allowed to use it to make yourself king. But you're accidentally kind of implicitly assuming you can build a gold statue of yourself. So you think you intrinsically value it, but actually you just value the statue. You have to be careful with these kinds of things.

A second warning is that you have to make sure you deeply reflect on your intrinsic values and see whether they change. I think they really do change through reflection, and you don't want to go all in on one while it's still changing a lot. Plus, our brains are pretty noisy machines; maybe your judgment is influenced by what you ate for breakfast, so you might want to wait until tomorrow before making a huge life decision based on it. So I think you really have to dig into these things and think about them.

Third, you have to be careful not to confuse something being an intrinsic value with something that just gives you positive affect. For example, you might really like cute kittens playing with yarn, but they're probably not an intrinsic value. You probably don't want to spread kittens playing with yarn over the whole universe. You might have an intrinsic value of the pleasure you get from watching a kitten play with yarn, or an intrinsic value related to the kitten enjoying playing with yarn. But you have to be careful to separate these similar-seeming psychological phenomena.

All right, so what the heck do people's intrinsic values actually look like? Well, you can categorize them into three groups.

- Those about the self;

- Those about the community, which are basically individuals that are special to you in some way, like your friends or family;

- Universal ones that don't relate to yourself or the community.

Now I suspect that most people have all three types of intrinsic values. People usually aren't only about themselves, though there might be exceptions. They usually aren't only about community either, and so on.

Now the interesting thing about universal intrinsic values is they create a reason for strangers who've never met to cooperate, because they both really care about producing something in the world that has nothing to do with themselves, and nothing to do with people they know, even. So you could have strangers all around the world in a kind of global community all trying to support one value. That's pretty interesting.

I want to give you a few examples where this might be happening. One is classical utilitarians; they might have happiness and non-suffering as one or two of their intrinsic values. Or libertarians might have autonomy as one of their intrinsic values. And I want to dig into the autonomy one a little bit, because I think for some libertarians, probably really what it's about is the beneficial effects of autonomy. But I suspect that there are some libertarians where it's really an intrinsic value. So if you say, "But why do you care about autonomy?" That's like asking someone, "Why do you care about your own pleasure?" What is the answer to that question? If it's an intrinsic value, it's very hard to answer that. Because it's sort of like, it's not clear there's anything underneath that.

Or take social justice advocates. I think when they fight for equal treatment, obviously there are a lot of beneficial effects of equal treatment. But I suspect for some social justice advocates, they care about equal treatment intrinsically as well, not just for its positive effects, but they think it's good in and of itself.

Experiments in search of intrinsic values

Now, I ran a study to learn about the frequency of 60 possible intrinsic values. This is a photograph of me that someone took while I was running the study. Why was I so sad? Because it's really hard to measure people's intrinsic values. Really, really hard. I did work pretty hard to try to screen out people who didn't properly understand their intrinsic values. But still, you have to take my study results with a large grain of salt.

So, in my US sample, here are a few of the intrinsic values related to self that people reported. 74% reported that "I feel happy" is an intrinsic value. 63% that "others love me". 62% that "people trust me".

On community intrinsic values, 69% of people reported that "people I know feel happy" is an intrinsic value. 50% that "people I know suffer less". And 44% that "people I know get what they want".

In terms of universal ones, 60% reported that "humans are kind to each other" is an intrinsic value. 57% that "humans have freedom to pursue what they choose". And 49% that "people suffer less".

Okay, but what intrinsic values do effective altruists have? I actually got a ton of effective altruists to fill out this survey, and I analyzed the data using a regression to look at which intrinsic values are associated with being an effective altruist, controlling for age, gender, and political affiliation. It turned out all of the effects I found were around increasing the happiness or pleasure, or reducing the suffering, of conscious beings. So maybe not a huge shock there, but somewhat validating for the technique, at least.

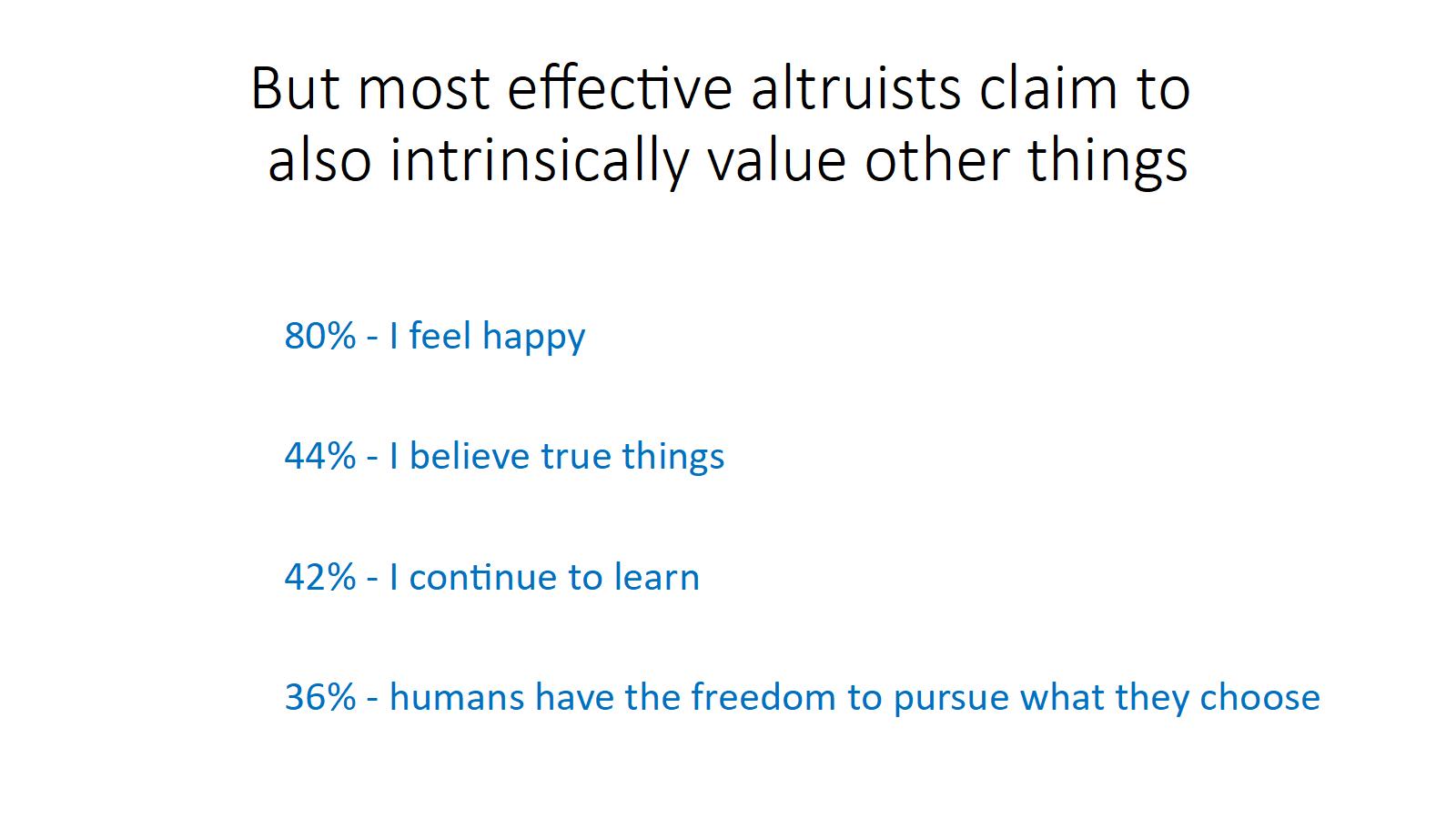

But perhaps a little more interestingly, most effective altruists actually reported some other intrinsic values as well. For example, 80% reported "I feel happy" as an intrinsic value, 44% reported "I believe true things", 42% "I continue to learn", and 36% "humans have the freedom to pursue what they choose". And there were some others as well.

Introspecting for your own intrinsic values

All right, so what the heck do your intrinsic values look like? I'm going to do something really mean, where I'm gonna make you actually think about it for one minute to try to come up with three guesses for your actual intrinsic values right now. So take a minute, try to come up with three guesses for your intrinsic values. I'm going to literally wait a minute, go.

All right, so clearly that wasn't nearly enough time to do this properly, but, raise your hand if you were able to come up with three guesses for your own intrinsic values. Oh, a lot of people actually came up with three guesses. That's great. All right. So now I want to give you a few thought experiments for helping you further explore your intrinsic values, and kind of get your intuition pumping.

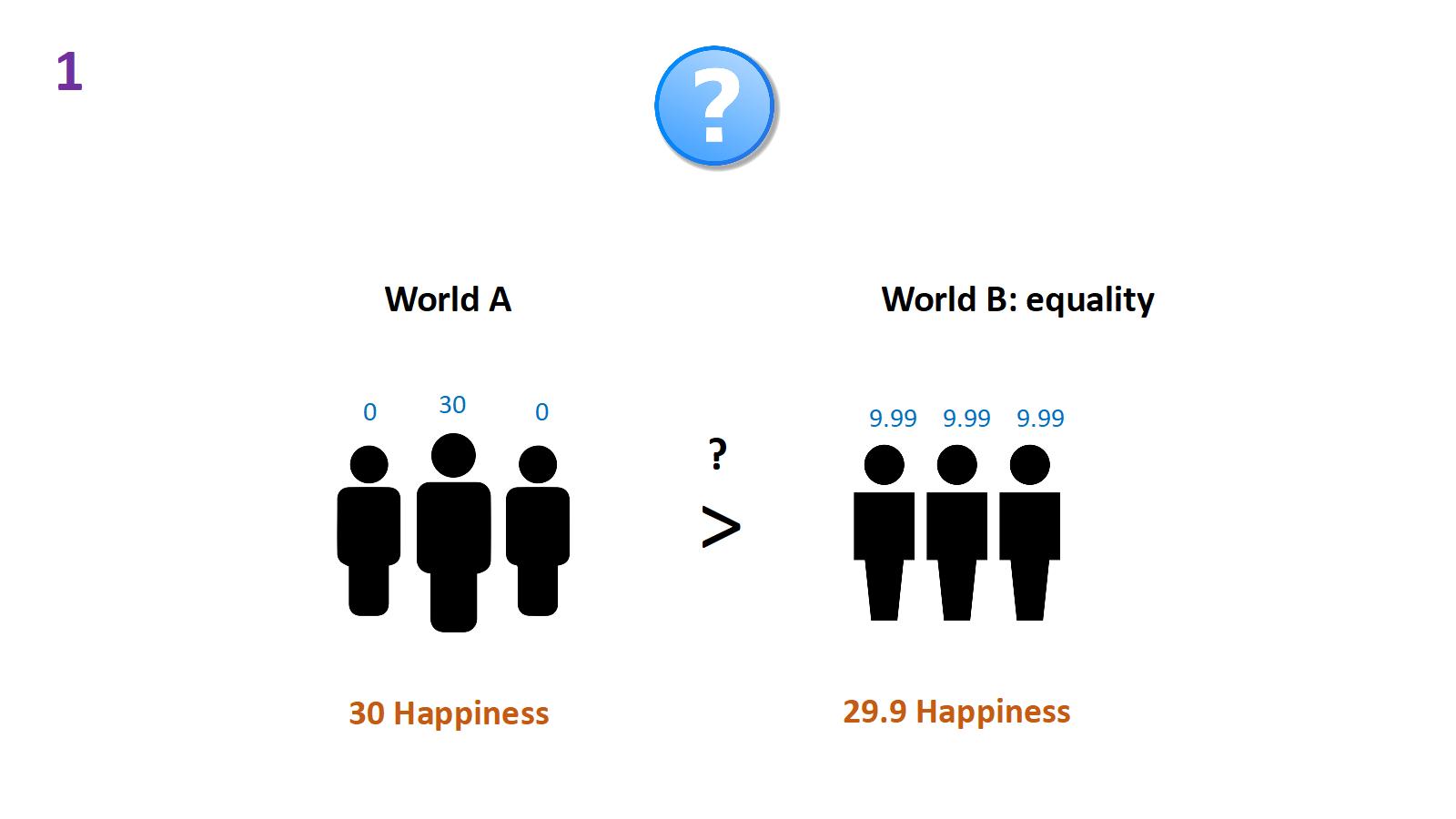

The first thought experiment: I want you to imagine two worlds, world A and world B. In world A there's 30 happiness, but it's all concentrated on one person. There are only three people in this world, right? In world B, there's 29.9 happiness, so you've given up a tiny bit of happiness, but the benefit is that you've spread it out equally among everyone: just under 10 happiness to each person (29.9 divided by 3, or about 9.97), okay? So the question is, is world A better than world B? Raise your hand if you think yes, put your hand on your shoulder if you think no. Interesting, some disagreements there.
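The arithmetic of this first thought experiment can be checked in a few lines. This sketch assumes, as the thought experiment does, three people and happiness as a single number that adds up across people. The "worst-off" figure is an illustrative addition, not something from the talk: one simple, hypothetical way to make the equality consideration visible next to the total.

```python
# Sketch of the arithmetic in the first thought experiment.
# Assumption: happiness is a single number that adds up across people.

world_a = [30.0, 0.0, 0.0]  # all 30 happiness concentrated on one of three people
world_b = [29.9 / 3] * 3    # 29.9 happiness split equally among three people


def summarize(world: list[float]) -> tuple[float, float]:
    """Return (total happiness, happiness of the worst-off person), rounded to 2 places."""
    # The worst-off figure is an illustrative equality lens, not a measure from the talk.
    return round(sum(world), 2), round(min(world), 2)


for name, world in (("World A", world_a), ("World B", world_b)):
    total, worst_off = summarize(world)
    print(f"{name}: total={total}, worst-off={worst_off}")
```

By total, world A comes out ahead by 0.1; by the worst-off person, world B looks far better (about 9.97 versus 0). Which of those lenses you care about, if either, is the kind of question the thought experiment is probing.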

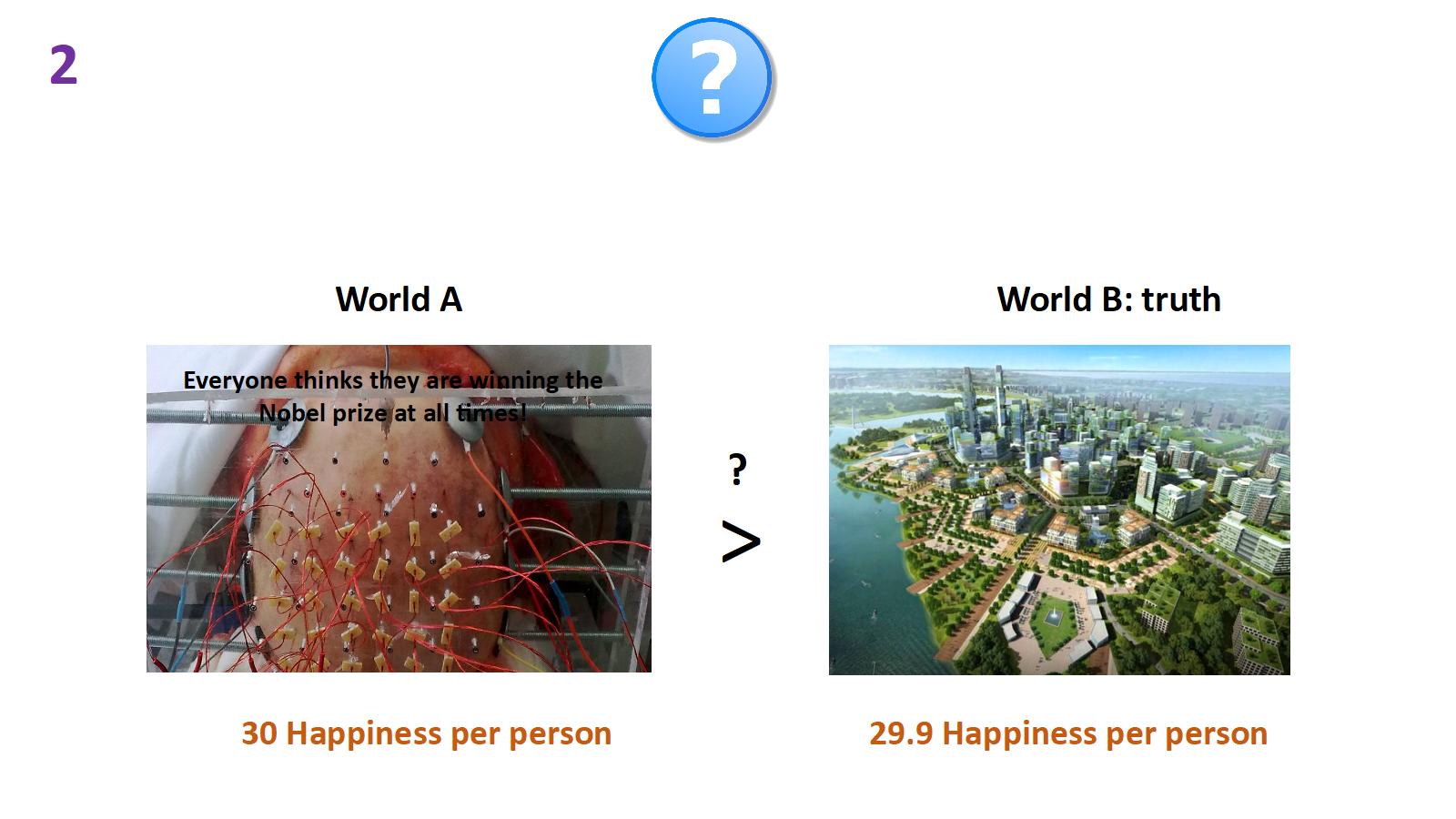

All right. Second thought experiment. In world A, there's 30 happiness per person. World B has slightly less, 29.9 happiness per person. The difference between these worlds is that in world A, everyone's hooked up to a machine that's convincing them they're winning the Nobel Prize every second of their life; that's why everyone's so happy. World B is just a really nice place to live, and people have pretty accurate beliefs about what's actually happening around them. They don't think they're winning the Nobel Prize all the time.

So is world A better than world B? Raise your hand if you think yes, put your hand on your shoulder if you think no.

Interesting, I'm seeing some more nos on that one. Okay.

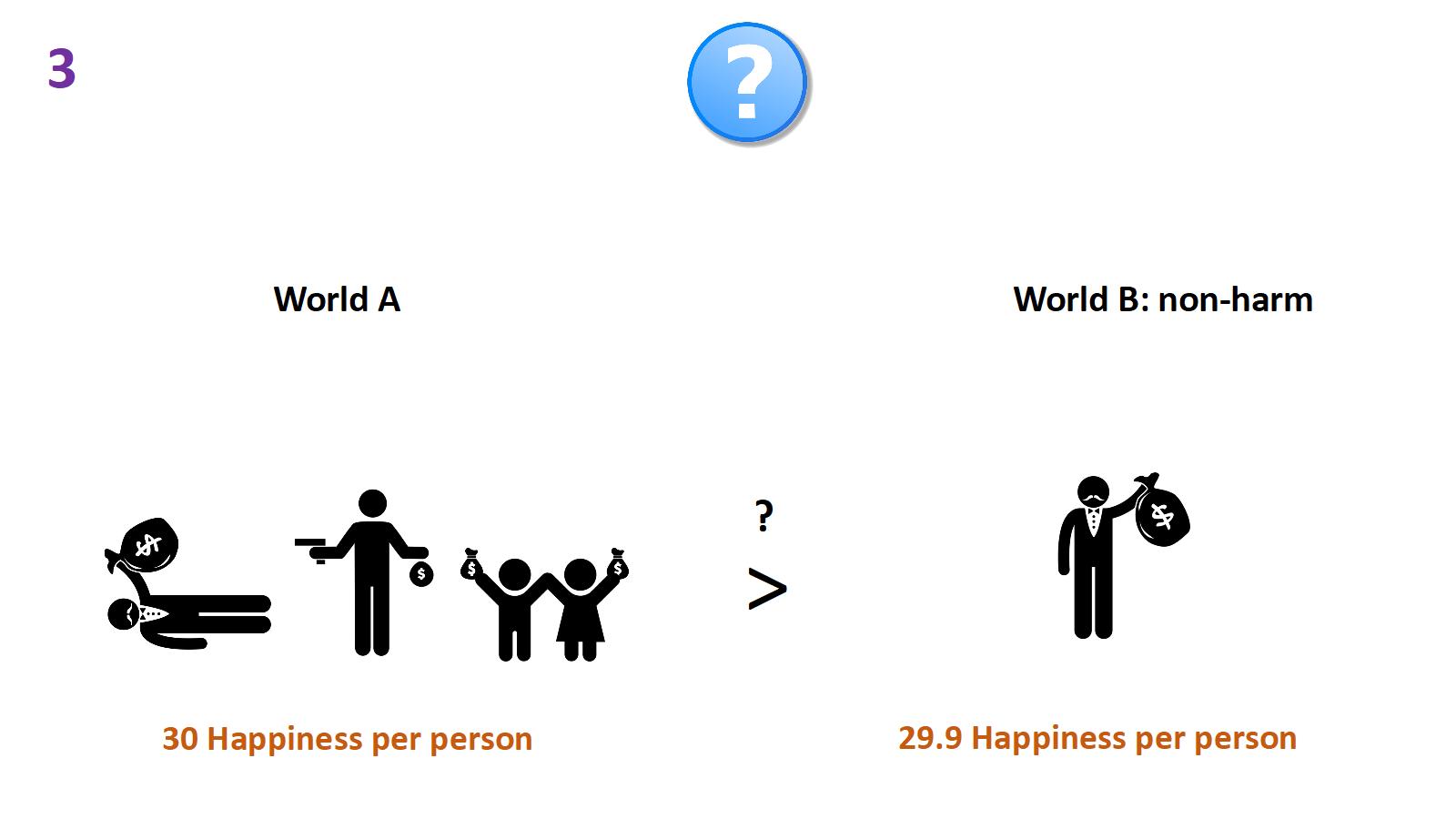

Third thought experiment: again two worlds. In world A there's 30 happiness per person; in world B there's 29.9 happiness per person. But the reason world A is slightly happier is that some crazy person figured out that by doing an insanely large amount of harm, you could cause a slightly greater amount of happiness than the harm you just did. So this person went out and brutally harmed tons and tons of people, but the net effect was that they made the world slightly happier on average. Okay, so if you think world A is better than world B, raise your hand. If you think otherwise, put your hand on your shoulder. Interesting, all right.

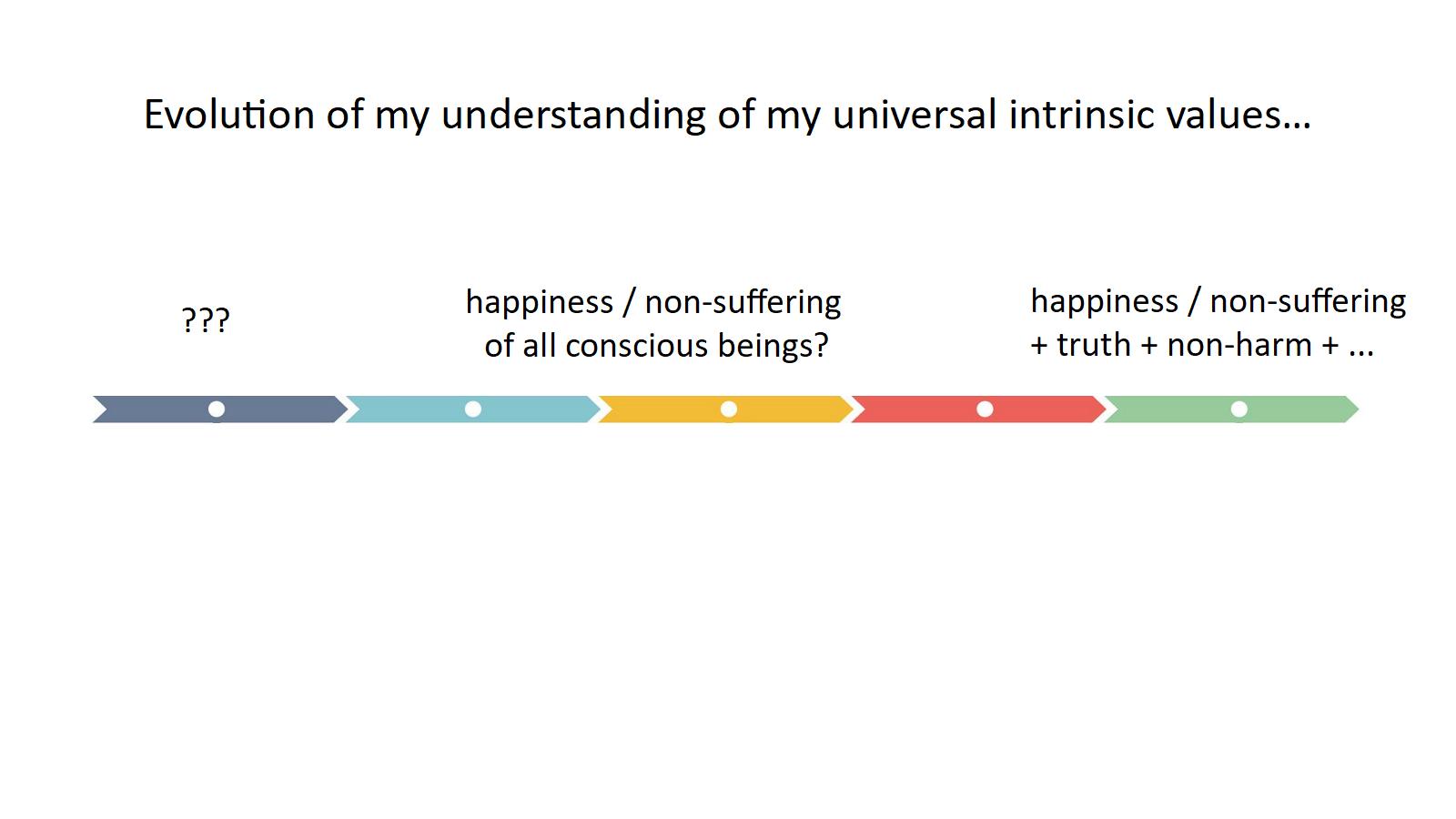

My understanding of my own intrinsic values has evolved over the years. When I was young, I couldn't even speak. Eventually I started thinking about these things, but I didn't know what my intrinsic values were. After reading some philosophy, I started thinking that all of my universal intrinsic values, the ones not related to myself or my community, were about the happiness and non-suffering of all conscious beings. Philosophy sometimes does this to people. But as I thought about it more and really started to reflect, I think I was actually making a mistake. I think I was mis-modeling my own brain, and my universal intrinsic values are actually significantly more complicated than that. I think a very large chunk of them has to do with the happiness and non-suffering of conscious beings. But I think there are other chunks of that value system, involving things like people believing true things and not doing tons of harm on the way to trying to help people.

My personal mission is actually sort of a direct application of my intrinsic values. I founded a company called Spark Wave, which creates novel software companies from scratch to try to make the world better. We build the first version of each product, and then we recruit a CEO to run that product and put it out there in the world.

Just to give you an idea of some of the things we work on, two of our products are very much focused around reducing suffering, so UpLift is trying to help people with depression feel less depressed. And Mind Ease is trying to take people who have really bad anxiety and make that anxiety significantly better when they need it.

Two of our other products are really focused around accelerating the search for the truth. One is Clearer Thinking, which is at clearerthinking.org if you want to check it out. We have about 20 free tools that help you think about your life, try to make better decisions, try to reduce your bias and so on. And Positly, which is a new platform we built for helping people run research studies to try to accelerate the pace of social science.

So I'd like to leave you with just three ideas. One, you probably have multiple intrinsic values. Two, you might not yet know what they all are. And three, it's really worth spending some time to explore them.

Thank you so much.

And if you're interested in my work, feel free to check it out on Facebook, at https://www.facebook.com/spencer.greenberg.

Questions

Question: I noticed in the last couple of talks I've seen you give, you've cited studies done on Mechanical Turk, which I imagine gives you the lowest research budget of maybe anyone ever. You pay like 10 cents, or a dollar, to each respondent. Maybe you could give us a little peek into your method of using Mechanical Turk, and how generalizable you think that might be for investigating things like that.

Spencer: Yeah, that's a great question. So for example, in this study it was a combination of Mechanical Turk and people on Facebook. I got my EAs from Facebook, because there aren't too many of them on Mechanical Turk.

With Positly, which is one of our products I mentioned, our goal is to make it really easy to recruit people really rapidly for studies. Ultimately, our goal is that you're going to be able to plug into all different diverse populations, through one really easy to use platform. That's where we're moving.

But right now, Mechanical Turk is one of the fastest, easiest, least expensive ways to get a sample. And one of the interesting things about the Mechanical Turk population is that it actually matches effective altruists on a bunch of variables. I've investigated this closely. In age distribution and in liberalness, among a few other ways, it's actually closer to EAs than the US population is. So it's certainly not a perfect population, but it's actually a better control group for EAs than you'd think. Also, our plan is to make that kind of research really, really cheap, while also getting really high-quality, representative samples. Ultimately that's where we want to move.

Question: When you think about intrinsic values, the word intrinsic obviously suggests some sort of fundamental nature. But I find myself not sure whether they're embedded in my brain as the result of natural selection over a long time, or whether they're more cultural. So I'm torn about where these things really come from, even in myself.

Spencer: Yeah, it's a good question, and it's really hard to answer. So what I'm talking about is a psychological phenomenon where you notice how we value some things, and we don't value other things. What is that? It's difficult to talk about. I do think it's influenced by all of the above. I think it's probably evolutionarily influenced, I think also partly culturally. I think they can change; I think mine have changed to some extent as I've reflected on them. And I think certain thought experiments actually can make them change in a way where you resolve inconsistencies and kind of find bugs in your value system.

For example, I think a lot of people have a value like "suffering is really bad", but they never really considered scope, and the question: "Is 1,000 people suffering just twice as bad as one person suffering? Or is it a lot worse than that?" So you can do these thought experiments, and you start to realize, "Oh, maybe I had some inconsistency in that value," and you can flesh it out. So it's a really complicated question. Yeah, I wish I had better answers, but this is the best shot I've got at it so far.

Question: It feels like you're describing a trajectory where we start with very complicated, messy values and refine them to a point. But you also sound a note of caution that you don't want to refine them down to too few, because then you miss the point as well.

Spencer: Well, you don't want to do it mistakenly. I think a lot of people jump the gun: they think, oh, I figured out the one true value, therefore that's what I value. But actually they're describing two things. They're describing their explicit belief about what values are, and then this other thing, which is what their internal value system actually does. They gloss over that distinction, and I think that's unhealthy.

Question: How do we avoid collapsing all of our values into the feeling of having our values satisfied? There's this sort of sense of "okay, I got what I want," which you can get from a lot of different things. Maybe that is a base value, but it seems like we don't really want it to be.

Spencer: Well, that's a good question. I think that humans have some values that are very satisfiable, and others that are just sort of insatiable, right? If you have a value that there shouldn't be suffering in the world, well, good luck satisfying that. Hopefully you're trying, but it's going to be a while. So I'm not too worried about that, and I think some values are more stable. And it's true, maybe you could try to suppress those values and promote ones that you could easily satisfy, and maybe that's not great.

Question: What advice do you have for people who are trying to balance or prioritize between multiple conflicting values that they do feel to be intrinsic?

Spencer: That's a good question. I think some of the hardest decisions in our lives are when we actually have multiple values coming into conflict, and I have some memories from my own life where that's happened. Actually, I think this is perhaps a sixth reason why it's useful to think about your intrinsic values: you can be in this deep conflict and not really recognize what's going on. What's going on might be that value A of yours is in direct conflict with value B, and you're being put to the test on how you balance those two things. It's really hard. So I don't have a nice answer for how you do that, other than to really reflect on those two values and ask: How much of this am I willing to give up for how much of that?

Question: What's a moment or story from your own life where you have been forced to question and update your intrinsic values?

Spencer: Yeah, so when I was younger, because I thought of myself as a utilitarian, I was convinced that the only thing that's really important in a universal sense is utility. And I thought that was the way my brain worked for a little while. I think I was confused about that. Back then, I think I would've been more okay with certain things where you're like, oh, we're going to do this thing, and it's not going to be great for the world, but it will eventually make up for it. Now I'm much less willing to consider things like that, because I think I actually have a fundamental value of not causing substantial amounts of harm.

I think people in the EA community sometimes think about veganism as a utilitarian thing, for example. But there are other ways to frame veganism, as in: don't constantly cause tons of harm, right? That's sort of a different value that might also support that lifestyle choice. So there are different ways to look at a lot of these things.

Question: Do you have a finite working set of intrinsic values that you go around with?

Spencer: I feel like I'm still working on it. I think our minds are so messy and complicated that this is a process you go through. And I think I have a much better understanding than I used to, but I don't think I have a complete understanding of my own mind in this way.